While headlines focus on increasingly powerful large language models, a more consequential transformation is unfolding: the rise of AI agents.

Last week’s launch of Manus, an agent system built atop existing foundation models (Claude 3.5 Sonnet for execution and Qwen for planning), signals a fundamental shift from AI systems that respond to prompts to systems that pursue objectives autonomously. That a system some consider the first glimpse of AGI emerged not from Silicon Valley but from China has complicated the standard narratives about AI competition and added a new wrinkle to the geopolitical struggle to dominate this sector.

We have become so accustomed to the drumbeat of incremental improvements in raw model capabilities that it is easy to tune out yet another announcement. But it would be risky to ignore or downplay the implications of Manus because it has the potential to reshape competitive dynamics across the technology landscape.

Manus and the rise of similar AI Agents represent not merely an incremental improvement but a fundamental transformation in how AI creates value.

GenAI has already propelled the world beyond disruption into Discontinuity: a fundamental break from past systems, not just an acceleration of existing trends. Just as investors and business leaders thought they were making progress toward a new playbook, along come Manus and AI Agents to challenge the conventional wisdom about business value that had begun to emerge over the past year.

This directional change in Discontinuity represents an assault on the new Durable Growth Moats many of these companies thought they were building. Traditional moats such as network effects, proprietary data, and switching costs were already being eroded by rapid AI-driven change. Now, it’s possible that Manus and AI Agents will breach the moat around cutting-edge LLMs and force all players to re-evaluate where the defensible value lies.

To understand this more concretely, I want to break down the dynamics behind Manus.

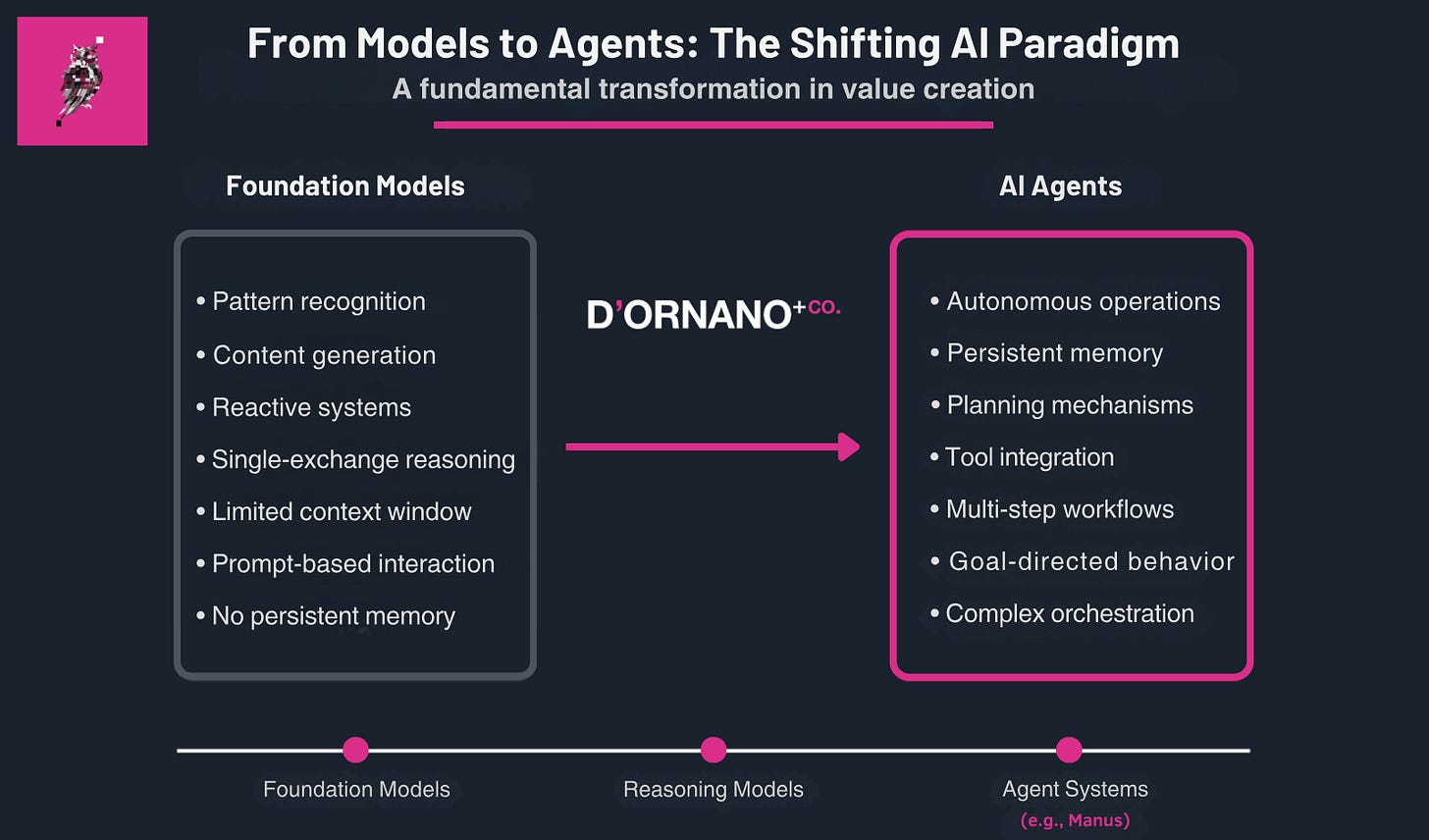

The distinction between models and agents is not merely semantic. Traditional foundation models excelled primarily at pattern recognition and content generation. The newest reasoning-enhanced models, such as OpenAI’s o1, Claude 3.7 Sonnet, and DeepSeek R1, represent significant advances in reasoning capabilities, allowing them to work through complex problems step by step.

Yet even these sophisticated reasoning models remain fundamentally reactive. They generate thoughtful responses to prompts but lack persistent memory across sessions, the ability to maintain ongoing tasks, and the capacity to take initiative without explicit human direction. Their reasoning stays confined to a single exchange rather than sustained across an extended objective.

Agents represent an entirely different paradigm. Manus — and systems like it — augment foundation models with critical capabilities: persistent memory that maintains context across interactions; planning mechanisms that decompose complex tasks; tool integration that extends capabilities beyond text generation; and perhaps most importantly, autonomous decision-making that enables initiative rather than mere response.
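To make those four capabilities concrete, here is a minimal Python sketch of an agent loop. It is a toy under loudly stated assumptions and not how Manus works. The planner is a hard-coded stub where a real system would ask a foundation model for a plan. The tools (`search_catalog`, `check_delivery`) are hypothetical functions over an in-memory catalog. The task is an illustrative flower order.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical tools: plain functions standing in for real integrations
# (retailer search, delivery-date checks). Not any real product's interface.
def search_catalog(query: str) -> list[str]:
    catalog = {"roses": ["red roses", "pink roses", "white roses"]}
    return catalog.get(query, [])

def check_delivery(item: str) -> bool:
    # Stub: assume every item except white roses arrives in time.
    return item != "white roses"

TOOLS: dict[str, Callable] = {"search": search_catalog, "deliver_ok": check_delivery}

@dataclass
class Memory:
    """Persistent memory: lives outside run_agent, so it survives across calls."""
    facts: dict[str, str] = field(default_factory=dict)

def plan(objective: str) -> list[str]:
    """Planning: decompose the objective into ordered steps.
    A real agent would ask a foundation model for this plan; this stub
    ignores the objective text and returns a fixed decomposition."""
    return ["recall_preference", "search", "filter_delivery", "choose"]

def run_agent(objective: str, memory: Memory) -> Optional[str]:
    state: dict = {}
    for step in plan(objective):
        if step == "recall_preference":
            state["avoid"] = memory.facts.get("last_color")
        elif step == "search":
            state["options"] = TOOLS["search"]("roses")  # tool use
        elif step == "filter_delivery":
            state["options"] = [o for o in state["options"] if TOOLS["deliver_ok"](o)]
        elif step == "choose":
            # Autonomous decision: pick an option without asking the user again.
            avoid = state["avoid"]
            picks = [o for o in state["options"] if not avoid or not o.startswith(avoid)]
            state["choice"] = picks[0] if picks else None
    if state.get("choice"):
        # Write the outcome back to memory so the next run can use it.
        memory.facts["last_color"] = state["choice"].split()[0]
    return state.get("choice")

if __name__ == "__main__":
    memory = Memory({"last_color": "red"})
    print(run_agent("roses that arrive on time, different color from last time", memory))
    print(memory.facts)
```

With `last_color` set to `"red"`, the first call skips red roses, filters out the late white roses, and picks pink. It then records pink, so a second call with the same `Memory` picks red. That carry-over between calls is the persistent-memory idea in miniature. The control flow a model would normally supply is fixed here only to keep the sketch runnable.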

These architectural differences enable a step-change in capability. Where models require detailed instructions for each task, agents can maintain objectives across multiple interactions. Where models generate isolated outputs, agents orchestrate extended workflows.

The agent ecosystem extends well beyond Manus. Anthropic’s Computer Use feature lets Claude operate a computer by reading screenshots and controlling the cursor and keyboard, so it can work directly with documents and desktop applications. OpenAI’s Operator uses its own browser to carry out web tasks on a user’s behalf, and OpenAI’s Deep Research conducts multi-step research across the web and compiles its findings into cited reports. Each approaches the orchestration problem differently, but all are early examples of this architectural shift.

The transition from models to agents parallels earlier technological inflection points: from mainframes to personal computers, from websites to web applications, from mobile phones to smartphone platforms. In each case, the latter didn’t merely improve the former but fundamentally transformed how technology created value.

What’s occurring now isn’t traditional technological leapfrogging but something more subtle and potentially more disruptive: a Discontinuity that represents a fundamental shift in the competitive axis. The primary dimension of competition is moving from model capability to orchestration effectiveness. This doesn’t allow latecomers to bypass foundation model development entirely, but it introduces a parallel dimension of competition that potentially redefines what constitutes leadership in AI.

Yichao “Peak” Ji, a co-founder of Manus, articulates this shift clearly: “agentic capabilities might be more of an alignment problem rather than a foundational capability issue.”

This insight suggests that the critical innovation isn’t necessarily developing more powerful foundation models but rather creating orchestration layers that properly align these models with human intentions and desired outcomes. The challenge becomes less about raw intelligence and more about correctly understanding objectives, decomposing tasks appropriately, and taking actions that align with user intent.

Frontier model development has traditionally demanded billions of dollars in compute, but DeepSeek’s R1 achieves competitive performance with notably less, suggesting that raw computational advantage is becoming a weaker differentiator. More fundamentally, agent architectures require different capabilities, centered on orchestration and alignment rather than parameter scaling. These orchestration skills are more widely distributed than the concentrated expertise needed for model training. That creates asymmetric competition, in which firms can deliver superior real-world value through effective orchestration even without the resources to build frontier models themselves.

This competitive axis shift has profound implications for the emerging AI Cold War.

OpenAI’s recent memorandum to the US Office of Science and Technology Policy explicitly frames the competition in these terms, advocating for restricting Chinese access to American AI technology and citing DeepSeek’s R1 model as evidence that “America’s lead on AI… is not wide and is narrowing.” The document proposes a three-tiered approach to AI exports aimed at maintaining American leadership in foundation models – a Cold War containment strategy adapted for artificial intelligence.

However, agent systems like Manus—which leverages American foundation models but adds potentially more valuable orchestration capabilities—suggest technological leadership may increasingly derive from agent architecture rather than raw model capability. Export controls on foundation models could prove ineffective if agent orchestration becomes the primary differentiator of AI capability. Countries with access to even moderately capable open-source models could potentially develop agent systems that outperform those built on more sophisticated but restricted models.

This creates asymmetric competition where massive investments in foundation model development might be offset by innovations in agent architecture occurring globally. The skills required for effective agent development—systems design, workflow optimization, and integration expertise—are more widely distributed than the concentrated expertise and computing resources needed for frontier model training.

The agent landscape already shows significant variation in reliability. Many early systems struggle with accuracy, instruction following, and edge cases. By contrast, enterprise-focused solutions like Agentforce and ServiceNow’s Now Assist perform more robustly within their defined operational boundaries. These systems excel in specific workflows where constraints are well understood and outcomes are clearly defined. However, even these more mature implementations struggle with novel situations outside their designed scope and with complex judgment calls that require contextual understanding. Their success stems precisely from carefully constraining their operational domain rather than from general capability.

According to industry analysts and AI researchers, these limitations point to a gradual rather than immediate transformation:

- Over the next 18-24 months, agents will primarily handle well-defined, repetitive tasks with clear success criteria, requiring significant human oversight.
- As reliability improves and planning capabilities mature, likely in the 2027-2028 timeframe, agents will begin managing entire workflows with minimal supervision, maintaining context across extended projects, and orchestrating multiple specialized tools.
- The longer-term horizon will likely feature specialized agent ecosystems that collaborate on complex objectives, with humans primarily setting objectives and governance rather than providing direct supervision.

The industries most immediately affected will be those with structured data, clear success metrics, and repetitive processes that currently require human judgment. E-commerce stands at the forefront. Consider a consumer shopping for Valentine’s Day: today, they might visit multiple websites, compare prices and reviews, check delivery dates, and finally complete a purchase—a process requiring dozens of decisions and actions. Tomorrow, they’ll simply tell their agent, “Find roses for my wife that will arrive by Valentine’s Day, similar to what I sent last year but with a different color.” The agent will handle the entire process—recalling preferences, comparing options across retailers, checking delivery guarantees, and executing the transaction.

Financial services will experience a similar transformation, particularly in investment research. Analysts currently spend hours sifting through earnings reports, news, and market data to generate insights. Agent systems will continuously monitor these sources, contextualize information based on client priorities and market conditions, and generate investment theses that adapt to new information, all while maintaining awareness of the client’s risk profile and objectives.

Healthcare administration, with its complex workflows and frequent exceptions, presents another immediate opportunity. By embedding agents into electronic health record systems, providers could dramatically reduce the administrative burden that currently consumes up to 20% of healthcare spending, while improving patient experience through proactive follow-up and coordination.

The common thread across these domains is the need for deep workflow integration, and it is here that there may be an opportunity to construct a Durable Growth Moat.

Standalone agents will provide limited value; the transformative potential comes from embedding agent capabilities directly into existing systems and processes, allowing them to access contextual information and take action without friction. The most successful implementations will focus not on building impressive agents in isolation, but on effectively integrating them into operational workflows.

This integration imperative creates a substantial advantage for workflow platforms with established process integrations. Companies like Salesforce and ServiceNow recognize this opportunity and are already developing agent capabilities that operate within their extensive workflow ecosystems. Salesforce’s Agentforce and ServiceNow’s Now Assist are early examples of this approach: agents embedded directly within platforms that orchestrate critical business processes. The value comes not from owning customer data (which remains the customers’ property) but from the privileged position these platforms occupy in business workflows. That position lets their agents access contextually relevant information with proper permissions and take actions across interconnected systems.

The agent revolution demands an evolution in AI infrastructure. Traditional inference optimization focused primarily on throughput and latency for individual requests. Agent systems, by contrast, require infrastructure optimized for sustained, multi-step operation—maintaining state across multiple model calls, orchestrating interactions with external tools, and managing complex workflows over extended periods.
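One way to picture “maintaining state across multiple model calls … over extended periods” is durable execution. In this approach each step’s result is checkpointed, so an interrupted workflow resumes where it stopped instead of starting over. The sketch below rests on assumptions of my own: a local JSON file serves as the state store, steps are plain Python functions standing in for model or tool calls, and `run_durable` is a hypothetical helper rather than an API from any vendor named here. Production systems would more likely use a database or a workflow engine.

```python
import json
from pathlib import Path
from typing import Any, Callable

# A step is (name, function); each function receives the results of earlier steps.
Step = tuple[str, Callable[[dict], Any]]

def run_durable(workflow_id: str, steps: list[Step], store_dir: str) -> dict:
    """Run steps in order, checkpointing each result to disk so that a
    restarted workflow resumes where it stopped instead of starting over.
    Step results must be JSON-serializable in this sketch."""
    path = Path(store_dir) / f"{workflow_id}.json"
    state = json.loads(path.read_text()) if path.exists() else {"done": {}}
    for name, fn in steps:
        if name in state["done"]:
            continue  # finished in an earlier run: skip rather than repeat side effects
        # Each step sees the accumulated workflow state from prior steps.
        state["done"][name] = fn(state["done"])
        # Checkpoint after every step (a production system would write atomically).
        path.write_text(json.dumps(state))
    return state["done"]
```

If a run crashes partway through, for example on a failed model call in the second step, calling `run_durable` again with the same `workflow_id` skips the completed first step and re-executes only what remains. The design choice is to checkpoint after every step. That costs one write per step but means a long workflow never repeats expensive or side-effecting work it has already finished.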

Companies like Together AI are developing infrastructure specifically designed for agent workloads, with capabilities for maintaining conversational context and managing tool use across multiple turns. Groq’s specialized hardware, optimized for deterministic latency rather than just raw throughput, becomes particularly valuable for agent systems that require predictable response times across a complex sequence of operations. The demands of agent workflows will likely accelerate the development of specialized AI infrastructure that differs significantly from what powers traditional model inference.

These operational challenges require new technical approaches. Traditional machine learning infrastructure focuses primarily on model deployment and monitoring. Agent operations involve orchestrating multiple components—foundation models, planning modules, tool integrations, and memory systems—while maintaining performance and reliability. Organizations will need execution frameworks that orchestrate complex workflows, observability systems that monitor goal completion and error recovery, evaluation infrastructures that assess performance across multiple dimensions, memory management systems that maintain context across extended interactions, and governance frameworks that ensure appropriate constraints.
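As an illustration of two of those needs, observability of goal completion and error recovery, here is a small Python sketch. It assumes the following: every step is a zero-argument callable, transient failures surface as exceptions, and a workflow’s goal counts as met only when every step succeeded. `StepRecord`, `Trace`, `run_step`, `run_workflow`, and `make_flaky` are hypothetical names invented for this example, not part of any real agent framework.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

@dataclass
class StepRecord:
    """One observable event: which step ran, how many tries it took, the outcome."""
    name: str
    attempts: int
    ok: bool
    error: Optional[str] = None

@dataclass
class Trace:
    records: list[StepRecord] = field(default_factory=list)

    def goal_completed(self) -> bool:
        # The goal counts as met only if every recorded step succeeded.
        return bool(self.records) and all(r.ok for r in self.records)

def run_step(name: str, fn: Callable[[], Any], trace: Trace,
             max_attempts: int = 3, backoff_s: float = 0.0) -> Any:
    """Error recovery: retry a failing step with linear backoff, logging the result."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            result = fn()
        except Exception as exc:  # a real system would retry only transient errors
            last_error = repr(exc)
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)
            continue
        trace.records.append(StepRecord(name, attempt, True))
        return result
    trace.records.append(StepRecord(name, max_attempts, False, last_error))
    return None

def run_workflow(steps: list[tuple[str, Callable[[], Any]]], max_attempts: int = 3):
    trace, results = Trace(), {}
    for name, fn in steps:
        out = run_step(name, fn, trace, max_attempts)
        if not trace.records[-1].ok:
            break  # halt and surface the failure rather than continue on bad state
        results[name] = out
    return results, trace

def make_flaky(failures: int, value: Any) -> Callable[[], Any]:
    """Hypothetical tool that times out `failures` times before succeeding."""
    calls = {"n": 0}
    def tool():
        calls["n"] += 1
        if calls["n"] <= failures:
            raise TimeoutError("tool timed out")
        return value
    return tool
```

Consider a search tool that times out twice before succeeding. It recovers on the third attempt, and the trace records `attempts=3`, so operators can see the fragility even though the goal completed. A tool that keeps failing past `max_attempts` stops the workflow, and the trace holds the last error for diagnosis. Halting is deliberate: letting an agent continue on a failed step is how small errors compound across a long workflow.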

For investors, this shift suggests opportunities beyond the handful of companies capable of training frontier models. Startups focused on agent architectures, specialized vertical agents, or novel orchestration approaches may deliver outsized returns as the agent paradigm matures. For established AI companies, it indicates potential vulnerability if they remain focused exclusively on model capabilities without developing effective agent orchestration.

For business leaders, the imperative is clear: identify workflows where agents can create disproportionate value, develop expertise in agent orchestration, experiment with human-agent collaboration models, invest in appropriate governance frameworks, and focus on vertical specialization where domain-specific knowledge creates sustainable advantages.

As with previous technological discontinuities, early recognition creates outsized advantages. Organizations that understand and act on this shift from models to agents will likely capture disproportionate value as the market evolves.

The emergence of systems like Manus represents more than just another AI product launch. It signals a fundamental shift in how artificial intelligence creates value—from responding to prompts to pursuing objectives, from generating content to orchestrating workflows, from augmenting human capabilities to increasingly autonomous operation.

The coming years will see intense competition in agent architectures, with rapid innovation in planning algorithms, memory systems, and orchestration technologies. As this unfolds, the question isn’t whether agents will transform AI’s business impact, but how quickly—and who will lead the transformation through this wave of Discontinuity.